Using Amazon S3 with the AWS.NET API Part 3: code basics cont’d

January 8, 2015

Introduction

In the previous post we looked at some basic code examples for Amazon S3: list all buckets, create a new bucket and upload a file to a bucket.

In this post we’ll continue with some more code examples: downloading a resource, deleting it and listing the available objects.

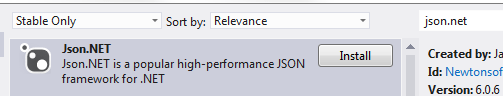

We’ll extend the AmazonS3Demo C# console application with reading, listing and deleting objects.

Listing files in a bucket

The following method in S3DemoService.cs will list all objects within a bucket:

```csharp
// Requires: using System; using Amazon.S3; using Amazon.S3.Model;
// GetAmazonS3Client() was defined in the previous post.
public void RunObjectListingDemo()
{
    using (IAmazonS3 s3Client = GetAmazonS3Client())
    {
        try
        {
            ListObjectsRequest listObjectsRequest = new ListObjectsRequest();
            listObjectsRequest.BucketName = "a-second-bucket-test";
            // A single ListObjects call returns at most 1000 objects.
            ListObjectsResponse listObjectsResponse = s3Client.ListObjects(listObjectsRequest);
            foreach (S3Object entry in listObjectsResponse.S3Objects)
            {
                Console.WriteLine("Found object with key {0}, size {1}, last modification date {2}", entry.Key, entry.Size, entry.LastModified);
            }
        }
        catch (AmazonS3Exception e)
        {
            Console.WriteLine("Object listing has failed.");
            Console.WriteLine("Amazon error code: {0}",
                string.IsNullOrEmpty(e.ErrorCode) ? "None" : e.ErrorCode);
            Console.WriteLine("Exception message: {0}", e.Message);
        }
    }
}
```

We use a ListObjectsRequest object to retrieve all objects from a bucket by providing the bucket name. For each object we print the key name, the object size and the last modification date, simple as that. In the previous post I uploaded a file called logfile.txt to the bucket called “a-second-bucket-test”. Accordingly, calling this method from Main…

```csharp
static void Main(string[] args)
{
    S3DemoService demoService = new S3DemoService();
    demoService.RunObjectListingDemo();
    Console.WriteLine("Main done...");
    Console.ReadKey();
}
```

…yields the following output:

Found object with key logfile.txt, size 4490, last modification date 2014-12-06 13:25:45.

The ListObjectsRequest class provides some basic filtering options. The “Prefix” property limits the results to those objects whose key names start with that prefix, e.g.:

```csharp
listObjectsRequest.Prefix = "log";
```

That will find all objects whose key names start with “log”, so logfile.txt is still listed.

You can also limit the number of objects returned, say to 5:

```csharp
listObjectsRequest.MaxKeys = 5;
```

You can also set a marker, so that the request only lists the objects whose keys come after the marker value in lexicographical order:

```csharp
listObjectsRequest.Marker = "leg";
```

With this marker “logfile.txt” is still listed, since it sorts after “leg”. A marker value of “lug”, however, would exclude it.
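To make the interplay of Prefix, Marker and MaxKeys a bit more concrete, here is a minimal in-memory simulation. It does not call AWS: ListingSimulator, ListPage and ListAll are hypothetical names, and the sketch assumes keys are compared ordinally (byte by byte), which matches S3's UTF-8 binary key ordering for plain ASCII keys. ListAll also sketches paging: when a real ListObjectsResponse has IsTruncated set but no NextMarker (S3 only returns NextMarker when a delimiter is specified), you can pass the last returned key as the Marker of the next request.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in for an S3 bucket listing.
// It mimics how Prefix, Marker and MaxKeys narrow down a ListObjects call; it does not call AWS.
public static class ListingSimulator
{
    // Returns one "page": keys that start with prefix and sort strictly after marker
    // (ordinal comparison), at most maxKeys of them, in ascending key order.
    // isTruncated is true when more matching keys remain after this page.
    public static List<string> ListPage(IEnumerable<string> allKeys, string prefix,
        string marker, int maxKeys, out bool isTruncated)
    {
        List<string> matching = allKeys
            .Where(k => k.StartsWith(prefix ?? string.Empty, StringComparison.Ordinal))
            .Where(k => marker == null || string.CompareOrdinal(k, marker) > 0)
            .OrderBy(k => k, StringComparer.Ordinal)
            .ToList();
        isTruncated = matching.Count > maxKeys;
        return matching.Take(maxKeys).ToList();
    }

    // Pages through every matching key, feeding the last key of each page
    // back in as the marker of the next request.
    public static List<string> ListAll(IEnumerable<string> allKeys, string prefix, int pageSize)
    {
        if (pageSize <= 0) throw new ArgumentOutOfRangeException(nameof(pageSize));
        var result = new List<string>();
        string marker = null;
        bool isTruncated;
        do
        {
            List<string> page = ListPage(allKeys, prefix, marker, pageSize, out isTruncated);
            result.AddRange(page);
            if (page.Count > 0)
            {
                marker = page[page.Count - 1];
            }
        }
        while (isTruncated);
        return result;
    }
}
```

For example, with the keys logfile.txt, image.png and readme.md, a Marker of “leg” returns logfile.txt and readme.md, while “lug” returns only readme.md, mirroring the behaviour described above.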

Downloading a file

Downloading a file from S3 involves reading from a Stream, a standard I/O operation in .NET. The following method loads the stream of logfile.txt, prints the object's metadata and converts the downloaded byte array into a string:

```csharp
// Requires: using System; using System.Collections.Generic; using System.IO; using System.Text;
//           using Amazon.S3; using Amazon.S3.Model;
public void RunDownloadFileDemo()
{
    using (IAmazonS3 s3Client = GetAmazonS3Client())
    {
        try
        {
            GetObjectRequest getObjectRequest = new GetObjectRequest();
            getObjectRequest.BucketName = "a-second-bucket-test";
            getObjectRequest.Key = "logfile.txt";
            GetObjectResponse getObjectResponse = s3Client.GetObject(getObjectRequest);

            // Print the metadata attached to the object.
            MetadataCollection metadataCollection = getObjectResponse.Metadata;
            ICollection<string> keys = metadataCollection.Keys;
            foreach (string key in keys)
            {
                Console.WriteLine("Metadata key: {0}, value: {1}", key, metadataCollection[key]);
            }

            using (Stream stream = getObjectResponse.ResponseStream)
            {
                long length = stream.Length;
                byte[] bytes = new byte[length];
                int bytesToRead = (int)length;
                int numBytesRead = 0;
                do
                {
                    // Read in chunks of at most 1000 bytes.
                    int chunkSize = 1000;
                    if (chunkSize > bytesToRead)
                    {
                        chunkSize = bytesToRead;
                    }
                    int n = stream.Read(bytes, numBytesRead, chunkSize);
                    if (n == 0)
                    {
                        // The stream ended early: stop instead of looping forever.
                        break;
                    }
                    numBytesRead += n;
                    bytesToRead -= n;
                }
                while (bytesToRead > 0);

                // Decode only the bytes that were actually read.
                string contents = Encoding.UTF8.GetString(bytes, 0, numBytesRead);
                Console.WriteLine(contents);
            }
        }
        catch (AmazonS3Exception e)
        {
            Console.WriteLine("Object download has failed.");
            Console.WriteLine("Amazon error code: {0}",
                string.IsNullOrEmpty(e.ErrorCode) ? "None" : e.ErrorCode);
            Console.WriteLine("Exception message: {0}", e.Message);
        }
    }
}
```
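The read loop above relies on stream.Length, and Stream.Length may throw NotSupportedException on streams that do not support seeking, so it is not guaranteed to be available for every stream you read from. As an alternative, the hypothetical StreamHelpers class below (not part of the AWS SDK) reads until Read returns 0, so it needs no length up front, and decodes the bytes with a UTF8Encoding that throws on invalid input instead of silently substituting replacement characters. Treat it as a sketch under those assumptions.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical helpers for illustration; not part of the AWS SDK.
public static class StreamHelpers
{
    // Reads a stream to its end without using Stream.Length.
    public static byte[] ReadAllBytes(Stream stream, int chunkSize = 4096)
    {
        if (stream == null) throw new ArgumentNullException(nameof(stream));
        if (chunkSize <= 0) throw new ArgumentOutOfRangeException(nameof(chunkSize));
        byte[] buffer = new byte[chunkSize];
        using (MemoryStream memory = new MemoryStream())
        {
            int n;
            // Read may return fewer bytes than requested; 0 means end of stream.
            while ((n = stream.Read(buffer, 0, buffer.Length)) > 0)
            {
                memory.Write(buffer, 0, n);
            }
            return memory.ToArray();
        }
    }

    // Decodes UTF-8, throwing DecoderFallbackException on invalid byte sequences.
    public static string DecodeUtf8Strict(byte[] bytes)
    {
        var strictUtf8 = new UTF8Encoding(encoderShouldEmitUTF8Identifier: false, throwOnInvalidBytes: true);
        return strictUtf8.GetString(bytes);
    }

    // Returns false when the bytes are not valid UTF-8, e.g. for many binary files.
    public static bool TryDecodeUtf8(byte[] bytes, out string text)
    {
        try
        {
            text = DecodeUtf8Strict(bytes);
            return true;
        }
        catch (DecoderFallbackException)
        {
            text = null;
            return false;
        }
    }
}
```

Inside RunDownloadFileDemo you could then write byte[] bytes = StreamHelpers.ReadAllBytes(stream); and use TryDecodeUtf8 to check whether the object really holds UTF-8 text before printing it.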

Run it from Main:

```csharp
demoService.RunDownloadFileDemo();
```

In my case there was only one metadata entry:

Metadata key: x-amz-meta-type, value: log

…which is the one I attached to the file in the previous post.
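The metadata key comes back as x-amz-meta-type because S3 stores user-defined metadata under the x-amz-meta- prefix and keeps user-defined key names in lowercase. The sketch below illustrates that mapping in both directions; MetadataKeys, ToStorageKey and ToUserName are hypothetical helpers written for this illustration, not the SDK's MetadataCollection.

```csharp
using System;

// Hypothetical helper showing how a user-defined metadata name maps to the key S3 reports back.
public static class MetadataKeys
{
    public const string UserPrefix = "x-amz-meta-";

    // "Type" -> "x-amz-meta-type"; an already-prefixed name is only lower-cased.
    public static string ToStorageKey(string name)
    {
        if (string.IsNullOrEmpty(name)) throw new ArgumentException("Name must not be empty.", nameof(name));
        string lower = name.ToLowerInvariant();
        return lower.StartsWith(UserPrefix, StringComparison.Ordinal) ? lower : UserPrefix + lower;
    }

    // "x-amz-meta-type" -> "type"; keys without the prefix are returned unchanged.
    public static string ToUserName(string storageKey)
    {
        if (storageKey == null) throw new ArgumentNullException(nameof(storageKey));
        return storageKey.StartsWith(UserPrefix, StringComparison.OrdinalIgnoreCase)
            ? storageKey.Substring(UserPrefix.Length)
            : storageKey;
    }
}
```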

In the example above we know beforehand that the object contains text, so the bytes can safely be converted into a string. That is not always the case, since you can store any file type on S3. GetObjectResponse has a method that saves the response stream to a file:

```csharp
getObjectResponse.WriteResponseStreamToFile("full file path");
```

…which has an overload to append the stream contents to an existing file:

```csharp
getObjectResponse.WriteResponseStreamToFile("full file path", true);
```
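The difference between the two calls is whether an existing file is replaced or extended. The local sketch below shows that distinction with a plain FileStream: FileMode.Create creates or truncates the target, while FileMode.Append writes after its existing contents. FileSink.WriteStreamToFile is a hypothetical helper for illustration only; it does not call AWS and is not how the SDK implements WriteResponseStreamToFile.

```csharp
using System;
using System.IO;

// Hypothetical local analogue of writing a response stream to disk; no AWS calls involved.
public static class FileSink
{
    // append == false: create the file or truncate an existing one (FileMode.Create).
    // append == true: write after the existing contents, creating the file if needed (FileMode.Append).
    public static void WriteStreamToFile(Stream source, string filePath, bool append)
    {
        if (source == null) throw new ArgumentNullException(nameof(source));
        FileMode mode = append ? FileMode.Append : FileMode.Create;
        // FileMode.Append is only valid together with FileAccess.Write.
        using (FileStream target = new FileStream(filePath, mode, FileAccess.Write))
        {
            source.CopyTo(target);
        }
    }
}
```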

Deleting a file

Deleting an object from S3 is just as straightforward as uploading it:

```csharp
// Requires: using System; using Amazon.S3; using Amazon.S3.Model;
public void RunFileDeletionDemo()
{
    using (IAmazonS3 s3Client = GetAmazonS3Client())
    {
        try
        {
            DeleteObjectRequest deleteObjectRequest = new DeleteObjectRequest();
            deleteObjectRequest.BucketName = "a-second-bucket-test";
            deleteObjectRequest.Key = "logfile.txt";
            // S3 reports success even if no object with this key exists.
            DeleteObjectResponse deleteObjectResponse = s3Client.DeleteObject(deleteObjectRequest);
        }
        catch (AmazonS3Exception e)
        {
            Console.WriteLine("Object deletion has failed.");
            Console.WriteLine("Amazon error code: {0}",
                string.IsNullOrEmpty(e.ErrorCode) ? "None" : e.ErrorCode);
            Console.WriteLine("Exception message: {0}", e.Message);
        }
    }
}
```

Calling this from Main…

```csharp
demoService.RunFileDeletionDemo();
```

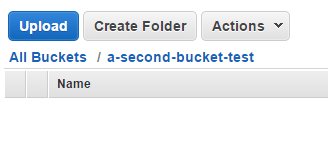

…removes the previously uploaded logfile.txt from the bucket:

In the next post we’ll see how to work with folders in code.
